COMMISSION FOR BASIC SYSTEMS

EXPERT TEAM TO EVALUATE THE IMPACT OF CHANGES OF THE GOS ON GDPS

FINAL REPORT

Toulouse, France, 9-11 March 2000

1. OPENING OF THE MEETING (agenda item 1)

1.1 The meeting of the Expert Team, chaired by Mr Terry Hart, was held at the kind invitation of Météo-France at its International Conference Centre in Toulouse, France, from 9 to 11 March 2000. Mr Gérard De Moor, Deputy Director of CNRM, who opened the proceedings of the COSNA Workshop held prior to this meeting on behalf of Météo-France, also welcomed the participants of the Expert Team meeting. Mr Hart opened the meeting, welcomed the participants, thanked them for their contributions to the work of the Team to date, and gave an overview of the relevant activities carried out by the Team, largely by electronic mail.

1.2 Mr Hart noted that the 9th Session of the previous Working Group on Data-processing (held in Geneva, November 1997) had established a sub-group on Assessing Impacts of Changes to the GOS with Gilles Verner (CMC) as convenor. This sub-group started work on a list of tasks, initially considering the sensitivity of verification scores to the climatology used. Most of this contribution was made by Gilles Verner and his team at CMC. However, the sub-group lapsed, as it became evident that a new CBS working structure was to be established. He noted that this newly formed team carries on the essence of the earlier sub-group into the new OPAG team structure and has been assigned the task:

To plan and co-ordinate analyses of the NWP verification exchanged by the GDPS and use them as appropriate to develop methods and procedures for assessing planned and unplanned changes to the GOS on the operation of the GDPS.

The Team has initially been asked to produce two deliverables:

a report on how analyses of NWP verification can be used to assess positive and negative impact of changes to the global observing system on the operation of the GDPS, particularly NWP, and

a set of guidelines to be used where action is required to minimize the impact of a loss of observations on the operation of the GDPS.

1.3 Mr Morrison Mlaki, Chief, Data-processing System Division, WWW Department of WMO, thanked Météo-France and expressed appreciation for its hosting of the meeting of the Expert Team to evaluate the impact of changes of the GOS on NWP, and welcomed the participants. He recalled that CBS-Ext.(98) had identified the main elements of the work programme for each OPAG, and he listed the relevant tasks of the OPAG on DPFS and the deliverables of the Team, as defined by the CBS AWG and indicated by the Chair.

1.4 Mr Mlaki further noted that the CBS Implementation/Coordination Team on Data Processing and Forecasting Systems, meeting in November 1999, had reviewed the impressive progress achieved by the Team. It had noted that the guidelines developed for Members would need to be refined to identify clearly who does what. He informed the members of the satisfaction and congratulations expressed by the CBS AWG for the impressive amount of work the Team had accomplished by correspondence.

1.5 Mr Mlaki invited the meeting to consider and recommend mechanisms for implementation of its findings and guidelines on an operational basis which could include the need for lead centers in these activities.

1.6 Mr Mlaki paid tribute to the Chair and to all Members of the Team for their outstanding contributions to the work of the Team and for what they would achieve at this meeting. He wished the meeting every success and once again thanked the hosts for the excellent facilities provided for the meeting.

2. ORGANIZATION OF THE MEETING (agenda item 2)

2.1 Approval of the agenda (agenda item 2.1)

2.1.1 The meeting adopted the agenda given in Appendix I.

2.2 Working arrangements for the meeting (agenda item 2.2)

2.2.1 The meeting agreed on its working hours, mechanism and work schedule.

2.2.2 There were 10 participants at the meeting as indicated in the list of participants given in Appendix II.

3. USE OF NWP VERIFICATION STATISTICS TO ASSESS IMPACT OF CHANGES TO THE GOS (agenda item 3)

3.1 The meeting noted that, in principle, NWP verification statistics provide a tool to assist in monitoring the operation and performance of the Global Observing System (GOS). If the statistics could be used to assess the impact of changes in the GOS, they would complement earlier Observing System Experiment (OSE) and Observing System Simulation Experiment (OSSE) studies, vindicating the claims made in advance, adding credibility to such design procedures and confirming the delivery of improved performance in operational products.

3.2 It should be noted, however, that the routinely exchanged scores only measure broad-scale features of operational global models. A more effective analysis of the impact of changes in the GOS would also include impacts on smaller-scale NWP and on non-NWP aspects of GDPS operations. However, the meeting agreed that it was not feasible to assess these issues in the time available. A more comprehensive evaluation of the impact of changes in the GOS on NWP will require these aspects to be developed.

(a) Feasibility of the task

3.3 On the face of it, the use of NWP verification statistics to detect the impact of changes in the GOS would appear to be straightforward. Many OSEs and OSSEs have shown the potential impact of new or improved observation types and have been used to justify the development or extension of observing platforms. Predictions based on the NCEP and ECMWF re-analyses show increasing skill as the observation base has improved (e.g. Kalnay et al., 1998). However, systematic retrospective use of operational verification statistics appears to be less common. In principle, such assessments could be useful in confirming the anticipatory studies and demonstrating the real impact of both improvements and deterioration in the observing system.

3.4 On the other hand, there are some good reasons for caution as indicated by comments based on experience from several members of the team. The meeting noted the recommendation of the 1997 Workshop on impact studies that:

OSE should always address scenarios in which "observation increments" are big enough in order to get a signal, which can be extracted, from the intrinsic noise of the data assimilation system

In practice, some factors making it difficult to use the verification statistics are:

i) OSEs indicate that the change in observations must be significant in scale and geographical coverage for a signal to be detected; conclusive results cannot be obtained when the volume of observations is small, or the period of verification too short,

ii) the performance of NWP systems is affected by other factors such as changes in the analysis and prediction components,

iii) there is significant natural variability in predictability of the atmosphere,

iv) the NWP centres differ in the types and quantity of observations used,

v) there are still some differences in methods for computing the scores.

3.5 To make any assessment of the impact of a change in the GOS using operational verification statistics, the impact of these factors needs to be recognized and minimized. Some progress is being made on some of the factors through further standardization in the computing of verification scores and greater dissemination of information on system changes at each centre.

3.6 A prerequisite for such a review is knowledge of any changes in the NWP systems at each centre, whether in the analysis and prediction schemes or in the availability of observations. Some information is transmitted with the verification statistics or in other publications, and some centres maintain a history on their web sites. Such information serves the broader purpose of assisting staff in the NMHSs and other users in their assessment and application of NWP products.

3.7 Studies of observation impact should include verification against observations, where possible, as well as against analyses. Verification against analyses favours the model itself: in the extreme case, scores against analyses may be better without any observations than with some, because the model then agrees more closely with itself. Most of the time the signal is the same against analyses and against radiosondes, but sometimes it differs, and for biases, for instance, verification against radiosondes is the only reliable method.
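The following toy calculation illustrates the point about biases. All values are hypothetical and the 15 m model bias is an assumed figure; the sketch only shows how the choice of verifying reference changes the reported bias when the analysis shares the model's systematic error, not how any centre computes its scores.

```python
# Toy example with hypothetical numbers: verifying against an analysis that
# shares the model's systematic error hides a bias that radiosondes reveal.
import math
from statistics import fmean


def bias(forecast, reference):
    """Mean error (forecast minus reference)."""
    return fmean(f - r for f, r in zip(forecast, reference))


def rmse(forecast, reference):
    """Root-mean-square error."""
    return math.sqrt(fmean((f - r) ** 2 for f, r in zip(forecast, reference)))


# Hypothetical 500 hPa heights (m) observed at four radiosonde stations.
observed = [5520.0, 5560.0, 5610.0, 5480.0]
MODEL_BIAS = 15.0  # assumed systematic model error (m)

forecast = [h + MODEL_BIAS for h in observed]

# Extreme case: no observations assimilated, so the verifying "analysis"
# is the model's own state and carries the same systematic error.
analysis_no_obs = [h + MODEL_BIAS for h in observed]
# With observations, the analysis is pulled halfway towards the truth.
analysis_with_obs = [h + MODEL_BIAS / 2 for h in observed]

print(bias(forecast, analysis_no_obs), rmse(forecast, analysis_no_obs))      # 0.0 0.0
print(bias(forecast, analysis_with_obs), rmse(forecast, analysis_with_obs))  # 7.5 7.5
print(bias(forecast, observed), rmse(forecast, observed))                    # 15.0 15.0
```

Against the analysis, the score looks best when no observations were used at all; only verification against the radiosondes recovers the full 15 m bias.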

3.8 Despite the general caution noted above, the operational verification statistics do have some beneficial characteristics. First, they are ongoing and consequently form a long time series, whereas OSEs are generally conducted over a period of weeks or at most months, which often makes it difficult to establish statistical significance. Secondly, comparing the verification from several centres may allow detection of a signal that is not apparent in the results of a single centre. Where the impact of an observing system change is not simultaneous at all centres, inter-centre differences may reveal trends not evident in the time series from a single centre.
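A minimal sketch of why inter-centre differences can reveal a signal, using hypothetical monthly scores: the variability common to both centres (for example, flow-dependent changes in predictability) cancels in the difference, leaving only the step at the centre assumed to have started using new data in April.

```python
# Hypothetical monthly rms errors (m) of 72 h height forecasts at two centres.
months = ["Jan", "Feb", "Mar", "Apr", "May", "Jun"]
common = [60.0, 66.0, 58.0, 70.0, 62.0, 64.0]  # variability shared by all centres

centre_a = [c + 2.0 for c in common]  # centre A: no system change
# Centre B: assumed to start using new data from April, improving by 6 m.
centre_b = [c + 2.0 if i < 3 else c - 4.0 for i, c in enumerate(common)]

# The shared variability cancels in the difference, exposing the step.
diff = [b - a for a, b in zip(centre_a, centre_b)]
for month, d in zip(months, diff):
    print(f"{month}: {d:+.1f}")
```

Centre B's own series rises from March to April even though its system improved; the step appears only in the difference series. In practice the common component is not identical at different centres, so a real difference series will still contain noise.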

3.9 In view of these characteristics, the Team recommended a general methodology for the use of verification statistics to investigate the impact of changes in the observing system:

i) list planned, possible, and actual changes to the GOS which we need to assess.

ii) survey existing observation impact experiments for results relating to the types of change listed in (i).

iii) apply judgement to extrapolate the results from (ii) to predict the probable impact of the changes in (i).

iv) assess operational verification results before and after the changes, to see whether they reflect (within normal variability) the changes predicted in (iii). Statistical tests should be used to determine the significance of any observed impacts.
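Step (iv) does not prescribe a particular test. One possible choice, shown here only as a sketch, is a two-sided permutation test on the change in mean daily score before and after a change. The function name, defaults and data are hypothetical, and the test assumes the daily scores are exchangeable, which autocorrelated verification series are not; blocks of consecutive days or an effective sample size would be needed in a real assessment.

```python
import random
from statistics import fmean


def permutation_test(before, after, n_perm=10_000, seed=1):
    """Two-sided permutation test for a change in the mean score.

    Returns (observed change in mean, p-value). Assumes the scores are
    exchangeable under the null hypothesis of no change.
    """
    observed = fmean(after) - fmean(before)
    pooled = list(before) + list(after)
    n_before = len(before)
    rng = random.Random(seed)  # fixed seed for reproducibility
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        diff = fmean(pooled[n_before:]) - fmean(pooled[:n_before])
        if abs(diff) >= abs(observed):
            extreme += 1
    # Counting the observed split itself keeps the p-value above zero.
    return observed, (extreme + 1) / (n_perm + 1)


# Hypothetical daily rms errors (m) for ten days before and after a change.
before = [52.0, 55.0, 49.0, 58.0, 51.0, 54.0, 50.0, 57.0, 53.0, 56.0]
after = [61.0, 58.0, 64.0, 60.0, 66.0, 59.0, 63.0, 62.0, 65.0, 60.0]
shift, p_value = permutation_test(before, after)
print(f"mean change {shift:+.1f} m, p = {p_value:.4f}")
```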

3.10 A note of caution is that the extrapolation in step (iii) can occasionally lead to an unacceptably wide range of possible interpretations. However, the aim of step (iv) is that an interpretation based on impact experiments can be held with greater confidence if the pattern of any response detected in the operational verification statistics conforms to that found in previous OSE studies.

3.11 The following case study applies this method to a change for which many OSEs have been conducted and the nature of the expected impact is well known. The main emphasis is on examining the differences among the centres' results in the routinely exchanged verification statistics.

(b) Case study

3.12 On 26 February 1999 the TOVS instrument on NOAA-11 failed. As a consequence the coverage of satellite soundings distributed by NESDIS on the GTS was halved. NOAA-15 had been launched in May 1998 with its new AMSU instrument. While radiances were available, the coding of these and the derivation of soundings of temperature and moisture had not commenced. Some NWP centres had access to radiances and had the capability of using these directly in their analysis system (e.g. variational analysis schemes). Other centres relied on NESDIS to resume production and transmission of, firstly, the SATEM messages (500 km resolution) on 5 May, and then the higher resolution BUFR messages on 17 August. An informal group from several centres assisted NESDIS in testing the codes and the contents of the messages to expedite the resumption. There were some problems initially with the coding of the messages. NESDIS also reported occasional problems with the quality of the retrievals.

3.13 This case study is a good candidate to test the usefulness of the operational verification statistics as the impact of TOVS is well known. It has a large impact on predictions in the southern hemisphere but a much less marked impact in the northern hemisphere and the tropics. In this case, there was a significant perturbation in the observations available (although some TOVS continued) and differences in the recovery process among the centres.

3.14 The known responses of the centres, as reported by team members or in official documentation, and other known system changes are listed in the table below.

Chronology of the loss of NOAA-11 TOVS data and the response from global NWP centres

| Date (1999) | Event |
|---|---|
| 26 February | Soundings from NOAA-11 cease |
| 8 March | US NCEP start using NOAA-15 radiances in their variational analysis scheme |
| 8 March | Météo-France switch to using NOAA-14 120 km radiances rather than 500 km SATEM retrievals |
| 9 March | ECMWF: number of vertical levels increased from 31 to 50; parallel tests indicate little impact on tropospheric performance |
| 29 March | UKMO commence using radiances from NOAA-15 |
| March | US NCEP: verification against analysis not available for 16, 17 and 21-29 March |
| 5 May | Distribution of SATEM messages from NOAA-15 commences; the Australian system commences using these observations |
| 5 May | ECMWF commence using raw radiances from NOAA-14 and -15 |
| 6 July | JMA commence using NOAA-15 SATEMs |
| 13 July | ECMWF change quality control on radiances, with tests indicating the largest positive impact over the southern hemisphere but neutral impact over the northern hemisphere |
| 17 August | Transmission commences of BUFR messages containing 120 km resolution soundings and radiances |

3.15 By the end of 1999, Australia had still not commenced using the 120 km retrievals from NOAA-15 because of changes in the format of the messages, and Météo-France were using only NOAA-14 120 km radiances. CMC were using only NOAA-14 SATEMs after a parallel test of NOAA-15 SATEMs showed a negative impact. JMA commenced using NOAA-15 120 km messages on 27 January 2000.

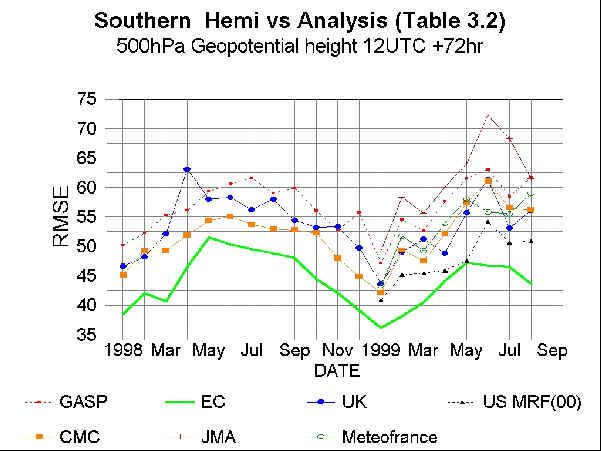

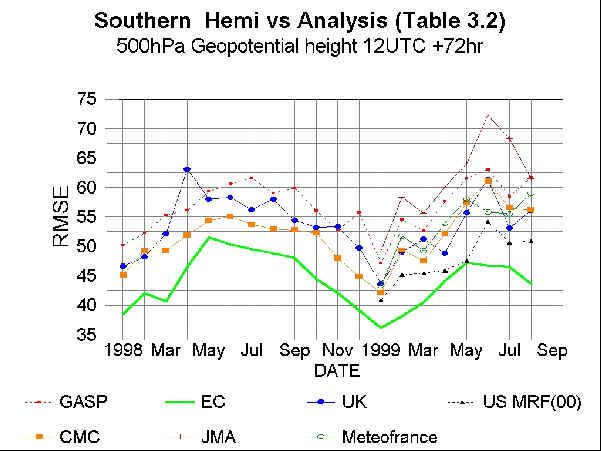

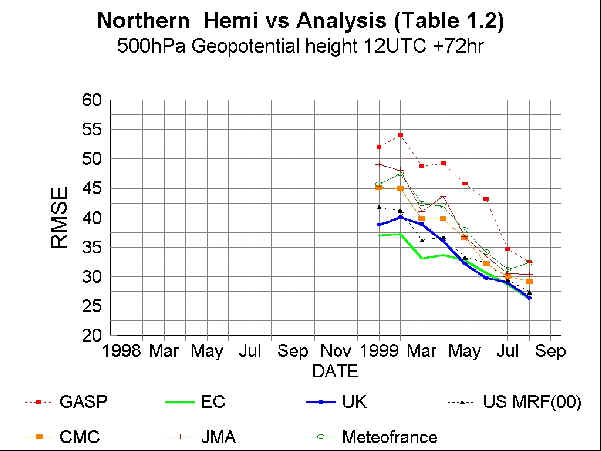

Examination of the time series of the routinely exchanged verification statistics does show some differences among the centres. The attached time series show the rms error of 72-hour predictions of geopotential height for the southern and northern hemispheres, verified against the analyses (i.e. Tables 3.2 and 1.2 of the CBS verification statistics). The base time is 12 UTC, although results for the US MRF, with a base time of 00 UTC, are also included.
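Hemispheric rms errors are area-weighted so that the closely spaced grid points near the pole do not dominate. The sketch below assumes values on a regular latitude-longitude grid weighted by the cosine of latitude; the grid, domain and weighting actually prescribed for the exchanged scores are those in Table F of the Manual on the Global Data Processing System, and the function name and numbers here are illustrative only.

```python
import math


def weighted_rmse(forecast, analysis, lats_deg):
    """Area-weighted rms error on a regular latitude-longitude grid.

    forecast, analysis: lists of rows, one row per latitude circle.
    lats_deg: latitude of each row in degrees.
    """
    num = 0.0
    den = 0.0
    for f_row, a_row, lat in zip(forecast, analysis, lats_deg):
        w = math.cos(math.radians(lat))  # grid-box area shrinks towards the pole
        for f, a in zip(f_row, a_row):
            num += w * (f - a) ** 2
            den += w
    return math.sqrt(num / den)


# Hypothetical 2 x 2 grid of 72 h geopotential heights (m).
lats = [0.0, 60.0]
analysis = [[5800.0, 5810.0], [5500.0, 5520.0]]
forecast = [[5800.0, 5810.0], [5510.0, 5530.0]]
print(round(weighted_rmse(forecast, analysis, lats), 2))  # 5.77 (unweighted: 7.07)
```

The 10 m errors on the 60° row count half as much as they would in an unweighted mean, because cos(60°) = 0.5.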

3.16 It appears possible to match some of the perturbations with the responses listed in the table above. For the southern hemisphere there is a significant increase in rms error from February to April 1999 for five of the seven centres represented. UKMO showed a decrease and the US MRF only a slight increase; these were the only two centres using NOAA-15 data by April. The relative performance of Australia (GASP) and JMA appears to be affected as NOAA-15 data are incorporated into the Australian system. After May, the performance of the ECMWF system runs counter to the trend at the other centres, coinciding with the start of its use of NOAA-15 radiance data. For the northern hemisphere the time series appear to follow similar trajectories and show less movement relative to each other.

3.17 It should be recalled that three changes contribute to any impact: the loss of NOAA-11, the use of the new AMSU instrument and the use of radiances. The NESDIS retrievals from NOAA-15 also use the AMSU instrument, so retrievals in cloudy areas should be of higher quality; however, their impact is unlikely to be as great as that of the direct use of AMSU radiances.

3.18 These results are only a sample but are indicative of the changes seen for other fields, forecast ranges and areas. Verification against radiosondes is noisier in the southern hemisphere because of the relatively small number of stations.

(c) Summary and further action

3.19 While this single case study supported the potential use of the routinely exchanged verification statistics, the results are only indicative: the change in the observing system was very large, and the centres differed in the actions they took during the recovery phase.

3.20 The team recommended that a case study be undertaken of another change in the observing system. The reduction in the number of Russian radiosondes during late 1999 was suggested as a case worthy of investigation. Proposals for undertaking such a study are presented in the recommendations below.

Recommendations

Information on NWP system changes

3.21 It is recommended that Centres provide timely details of major changes to their NWP systems, including:

(i) date of change

(ii) description of change

(iii) impact of change on verification from parallel testing.

The above details should be available on the Centre’s web site.

A summary should be included in the Centre’s annual GDPS progress report that is provided to WMO.

Extra data monitoring from lead centres

3.22 The Expert Team recognised the need to monitor and exchange information on the availability of data (along the lines of the information currently displayed by ECMWF). Such an exchange of information would alert centres to changes in the GOS. It is therefore recommended that the Lead Centres and other centres participating in monitoring extend their monitoring procedures to include information about regional variations in data volumes. Such information could include counts by:

(i) WMO block number

(ii) latitude /longitude box

(iii) individual platforms.

These counts should be compared with long-term averages to indicate areas of declining reports.
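The comparison with long-term averages could be automated along the following lines. This is a sketch only: the 80 % threshold, function names and report lists are hypothetical, and counts by latitude/longitude box or by individual platform could be obtained in the same way by changing the key used for counting. It relies only on the convention that the first two digits of a WMO station index (IIiii) give the block number.

```python
from collections import Counter


def block_counts(station_ids):
    """Count received reports per WMO block number (II of IIiii)."""
    return Counter(sid[:2] for sid in station_ids)


def declining_blocks(current, long_term_mean, threshold=0.8):
    """Blocks whose current count is below `threshold` x the long-term mean.

    Returns a dict mapping block number to the ratio current / long-term mean.
    """
    flagged = {}
    for block, mean in long_term_mean.items():
        if mean <= 0:
            continue  # no historical baseline to compare against
        ratio = current.get(block, 0) / mean
        if ratio < threshold:
            flagged[block] = round(ratio, 2)
    return flagged


# Hypothetical upper-air reports received on one day, and long-term daily means.
received = ["27612", "27962", "03005", "03808", "03953"]
long_term = {"27": 10.0, "03": 3.0, "26": 4.0}
print(declining_blocks(block_counts(received), long_term))  # {'27': 0.2, '26': 0.0}
```

A block with no reports at all (here "26") is flagged with a ratio of zero rather than being silently skipped.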

For each type of observation, the lead centres should consult other centres and agree on the exact procedures for monitoring, information exchange and alerting Members and WMO about problems.

Procedure for using verification statistics to assess the effect of changes to the GOS on NWP

3.23 Should the proposed verification case study (which will assess the effect of the loss of Russian sondes on NWP) as described below find a significant signal in the verification, then it is recommended that:

the monitoring and verification systems of Lead Centres/centres should be modified as recommended following results from the case study.

3.24 It should be noted that if no signal is detected in the verification study, this does not imply that loss of radiosondes has no impact.

Irrespective of the outcome of the verification case study, it is recommended that (on the basis of monitoring results):

(i) each relevant centre, Member and WMO Secretariat should implement the guidelines on actions required to minimise the impact of a loss of observations on the operations of the GDPS

(ii) Members should develop procedures for notifying users in the event that changes to the GOS result in significant degradation of NWP products.

Proposal for a case study

3.25 Terms of Reference for evaluating the impact of the reductions in the radiosonde network over Russia.

Working Hypothesis:

3.26 It is possible to establish a meaningful impact of reductions in the radiosonde network in Russia through evaluation of readily available verification scores of global and/or regional operational forecast models. Meaningful here implies finding a statistically and meteorologically significant signal above the noise expected from variability in skill as a function of, for example, seasonal trends, circulation regime, predictability considerations, changes in data assimilation systems, model changes, and general data access, availability and quality control issues.

3.27 The following outlines the procedure agreed upon by the Team for assessing the validity of this hypothesis.

3.28 The evaluations are to be performed primarily by members of the Team, or their designees, at each participating institution, based on the verification and related data sets available there. Each centre will determine its own level of participation. The evaluation is conducted under the auspices of the Team Chair and reports to him. WMO will provide a consultant to support the Team in the evaluation. The consultant's duties will be to coordinate activities, to facilitate the provision of necessary information and data exchange, and to compile and document results, conclusions and recommendations. The time frame of this study requires appointment of the consultant no later than 1 May 2000, submission of a draft report to the Team Chair by 1 September, and submission of the final report to WMO by 1 October 2000.*

* The Chair of the Task Team will provide the exact terms of reference for the WMO consultant, following further consultation with the members of the Team.

3.29 A necessary minimum requirement for this study is documentation of the locations and times at which the Russian RAOBs concerned were and were not available for use in operational NWP. This information is available from monitoring statistics from ECMWF, probably from NCEP, and possibly from other centres. It is assumed that participants will have access to the monthly CBS verification statistics, as well as regional and daily scores from their home institution for at least their own model(s). Monthly averaged statistics are not expected to suffice for detecting any impacts that might exist.

3.30 Analysis of the data will consist first of comparing verification scores by region with: 1) scores before and after the data loss and 2) scores for comparable periods in previous years. This could be done locally, over the area of the lost data, at shorter ranges, and downstream of likely impacts at longer ranges. Any apparent or suspected impacts are to be appraised for statistical and meteorological significance. This includes statistical analysis of the variability in scores for comparable periods in previous years and standard statistical tests of significance*. Further evaluation can be accomplished by stratifying scores over meteorologically similar years, i.e. analogues, in terms of such factors as La Niña (as in the 1999-2000 fall/winter) and measures of circulation regime, e.g. the PNA and NAO indices (from CDC, Boulder). Another suggested approach is to assess whether any decrease in skill during the period in question is related to intrinsic forecast difficulty, by relating it to the ensemble spread from operational models (ECMWF, NCEP). A decline in skill in the face of high predictability (small spread) is more significant than one associated with more difficult (large spread) situations. Ensemble information based on perturbations to the initial conditions can be further exploited by examining cases where the verifying value falls outside the ensemble envelope. In such cases, especially at shorter ranges where model error is likely to be small relative to analysis difficulties, analysis errors must be especially large compared with the typical analysis errors encompassed by the initial-state ensemble perturbations. Also, if an individual ensemble member verifies well when the control does not, this indicates that the analysis error is within the bounds of expected analysis errors.
Finally, individual cases can be examined to illustrate problems, or their absence, representative of the overall statistics and/or to isolate the more significant instances of impact. Consideration should also be given to evaluating analyses against independent information (cloud imagery) and the first guess. If judged necessary, participants in the evaluation may recommend confirmatory OSEs, possibly based on data denial using reanalysis data sets.

*It should be noted that verification statistics against radiosondes could be affected by the closure of stations in the list used for verification.
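Some of the checks described in 3.30 can be expressed compactly. The sketch below (hypothetical function names, thresholds and data) computes how unusual a period's score is relative to the same period in previous years, tests whether the verifying value falls outside the ensemble envelope, and lists days with large errors despite small ensemble spread. It is meant only to make the logic concrete and does not replace the statistical tests referred to above.

```python
from statistics import fmean, stdev


def standardized_anomaly(score, same_period_previous_years):
    """Departure of this period's score from the same period in earlier
    years, in units of the inter-annual standard deviation."""
    return (score - fmean(same_period_previous_years)) / stdev(same_period_previous_years)


def outside_envelope(verifying_value, member_values):
    """True if the verifying value lies outside the range of the ensemble."""
    return not (min(member_values) <= verifying_value <= max(member_values))


def low_spread_failures(errors, spreads, error_limit, spread_limit):
    """Indices of days with a large error despite small ensemble spread,
    i.e. poor forecasts in apparently predictable situations."""
    return [i for i, (e, s) in enumerate(zip(errors, spreads))
            if e > error_limit and s < spread_limit]


# Hypothetical December rms errors (m): this year versus four earlier Decembers.
print(round(standardized_anomaly(70.0, [60.0, 62.0, 58.0, 60.0]), 2))  # 6.12

# Hypothetical verifying value and ensemble member forecasts at one point.
print(outside_envelope(5.0, [1.0, 2.0, 3.0]))  # True

# Hypothetical daily errors and ensemble spreads (m).
errors = [50.0, 80.0, 85.0, 55.0]
spreads = [10.0, 12.0, 30.0, 11.0]
print(low_spread_failures(errors, spreads, error_limit=70.0, spread_limit=20.0))  # [1]
```

Day 2 has the largest error but is not listed, because its large spread marks it as an intrinsically difficult situation.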

Other verification issues

3.31 The Team briefly discussed other issues concerning the CBS verification system. Examples of the possible impact of using different climatologies in computing anomaly correlation scores were examined, and the results showed that the impact could be significant in some cases. Therefore, to help standardize the CBS verification methodologies, it is recommended that, to the extent possible, anomaly correlations be computed from a common climatology (which could be provided by ECMWF, NCEP or another centre). Arrangements should be made to have a reliable climatology available to all centres for this purpose, possibly through the WMO ftp server. The Team was informed that the Expert Team looking into the verification of long-range forecasts is also considering the effects of the reference climatology.
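To show how the choice of climatology enters the score, the sketch below computes a centred anomaly correlation with two different (hypothetical) climatologies. It assumes the centred form and omits the latitude weighting that a hemispheric score would normally include; the formulation to be used for the exchanged scores is the one laid down by CBS.

```python
import math


def anomaly_correlation(forecast, analysis, climate):
    """Centred anomaly correlation between forecast and analysis anomalies."""
    fa = [f - c for f, c in zip(forecast, climate)]
    aa = [a - c for a, c in zip(analysis, climate)]
    mf = sum(fa) / len(fa)  # domain-mean forecast anomaly
    ma = sum(aa) / len(aa)  # domain-mean analysis anomaly
    cov = sum((x - mf) * (y - ma) for x, y in zip(fa, aa))
    var_f = sum((x - mf) ** 2 for x in fa)
    var_a = sum((y - ma) ** 2 for y in aa)
    return cov / math.sqrt(var_f * var_a)


# Hypothetical 500 hPa heights (m) at four grid points.
analysis = [5500.0, 5560.0, 5620.0, 5580.0]
forecast = [5510.0, 5550.0, 5630.0, 5570.0]
clim_a = [5530.0, 5560.0, 5600.0, 5570.0]  # hypothetical climatology A
clim_b = [5500.0, 5570.0, 5590.0, 5590.0]  # hypothetical climatology B
print(round(anomaly_correlation(forecast, analysis, clim_a), 3))  # 0.857
print(round(anomaly_correlation(forecast, analysis, clim_b), 3))  # 0.966
```

Because the centred form removes the domain-mean anomaly, adding a constant to the climatology leaves the score unchanged; it is the spatially varying part of the difference between climatologies that alters the result.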

3.32 On the standardization of verification procedures, all centres participating in the exchange of CBS scores should adhere, to the extent possible, to the recognized procedures laid down in Table F of the Manual on the Global Data Processing System. Further clarification of these procedures may be required from time to time (e.g. on the use of a reference climatology). Ongoing assessments of the exchange of verification scores and of the methods used need to be carried out as part of the work of the OPAG DPFS.

3.33 The extension of the current exchange to include daily scores was also discussed. The Team concluded that there was not sufficient need at this time to include the exchange of daily statistics. However, the Team noted that these scores are available at each centre and could be made available if required (e.g. to the WMO consultant).

3.34 Other suggested approaches to the analyses of verification statistics in the context of observing system studies were:

systematic differences between scores for 00 and 12 UTC

impact of data cutoff times (e.g. MRF and AVN)

verifications valid at the same time but with base times 6 hours apart.
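The last of these approaches requires pairing forecasts that verify at the same time. A minimal sketch, assuming scores held in a dictionary keyed by base time and forecast range (a hypothetical data layout, not a CBS exchange format):

```python
from datetime import datetime, timedelta


def pairs_same_valid_time(scores, offset_hours=6):
    """Pair forecasts that verify at the same time but whose base times
    differ by `offset_hours`.

    scores: dict mapping (base_time, lead_hours) to a score such as rms error.
    Returns a sorted list of (valid_time, later_run_score, earlier_run_score).
    """
    pairs = []
    for (base, lead), score in scores.items():
        earlier = (base - timedelta(hours=offset_hours), lead + offset_hours)
        if earlier in scores:
            valid = base + timedelta(hours=lead)
            pairs.append((valid, score, scores[earlier]))
    return sorted(pairs)


# Hypothetical rms errors (m) keyed by (base time, forecast range in hours).
scores = {
    (datetime(1999, 12, 1, 12), 72): 40.0,
    (datetime(1999, 12, 1, 6), 78): 43.0,
    (datetime(1999, 12, 1, 0), 72): 41.0,
}
for valid, later, earlier in pairs_same_valid_time(scores):
    print(valid, later - earlier)  # 1999-12-04 12:00:00 -3.0
```

A negative difference means the later run verified better. Comparing such pairs over many days may help isolate the effect of observations available at one base time but not the other; the Team did not specify how such comparisons should be made.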

4. GUIDELINES WHERE ACTION IS REQUIRED TO MINIMISE THE IMPACT OF A LOSS OF OBSERVATIONS ON THE OPERATION OF THE GDPS (agenda item 4)

4.1 There are occasions when significant components of the Global Observing System (GOS) are lost for one reason or another. In some cases the loss is planned, or at least known beforehand, such as the shut-down of the Omega system on 30 September 1997. In other cases it is unplanned, such as the failure of satellite soundings from NOAA-11 in February 1999 before soundings from NOAA-15 were fully operational. Another type is the reduction in the network due to economic factors that limit the capacity of NMHSs to maintain their observing networks; such reductions may be rapid or gradual. The Year 2000 rollover was an instance where effective action was taken to minimize the impact of an anticipated loss of observations.

4.2 This Expert Team was tasked by CBS with preparing guidelines for action to minimize the impact of such losses. The following draft guidelines are intended to address a range of circumstances, focusing on short-term changes. The CBS project to redesign the GOS through the Future Composite Global Observing System addresses the longer-term deterioration of, in particular, the upper-air observing network.

4.3 The guidelines are based on experience with the shutdown of the Omega system and the action taken on Year 2000 compliance. The action proposed addresses the various phases into which such problems can be divided.

4.4 While the guidelines focus on a loss of observations, they could equally apply to maximising the impact of positive changes in the GOS. Such situations may arise as part of a planned program change such as targeted observations, special observing period data or implementation of a new observing platform. Coordinated information is also required in such situations to allow the data-processing system both to cope with and to make optimal use of the new observations.

4.5 Alerting

4.5.1 The change in the observing systems may be known in advance or it may be unplanned.

For known changes:

Operators should provide notification to WMO following the current procedures where these are specified.

otherwise information should be provided giving adequate notice through means such as:

official advice from the Secretary-General to NMHSs

notification through the Technical Commissions, in particular CBS and CIMO

use of specialist groups established for particular observation types (e.g. satellite soundings)

a data user’s e-mail news group as described below

Unplanned changes:

Lead Centres should maintain reliable monitoring procedures to detect any problems

RTHs should identify any communications problems restricting the flow of data

Lead Centres should alert data providers (if necessary) through designated contact points (such as the Technical Coordinator of the AMDAR panel). The WMO Secretariat may be able to assist in identifying relevant contact points.

4.5.2 Problems should be detectable with the quantity and quality monitoring systems established within CBS. Although many centres carry out data monitoring, the Lead Centres responsible for particular observation types should alert the operators and the user community to a potential problem, particularly if the loss is due to a change in quality rather than to a loss of the observations themselves. (Such changes may be due to changes in the calibration of satellite instruments, which may not be apparent to all users.) For the NMHSs, this alerting can be done through designated points of contact. The existing network of Focal Points for Data Quality Monitoring was not established for this purpose but may be suitable; this network needs to be updated regularly.

4.6 Assessment of the problem

Define the nature of the problem

collect authoritative information from operating agencies

obtain guidance from relevant technical experts within CBS or other commissions

Obtain information on the scope of the problem and the timing of planned changes

range of users and programs likely to be affected

geographical extent

duration of the problem (if temporary)

Assess the likely impact on a range of users

NWP

general use of the observations in the operations of NMHSs such as forecasting, climate or marine services

other WMO programs especially GCOS, hydrology, GAW

other WMO commitments e.g. Office for the Coordination of Humanitarian Affairs (OCHA), GCOS, IPCC, Montreal Protocol.

4.6.1 The WMO system should act as an advocate for the broad range of its Members and users and be aware of the sensitivities of NMHSs and programs to data losses.

4.6.2 For NWP the impact can be based on surveys of previous observation impact studies and the work of organizations such as NAOS, COSNA, and EUCOS, the CBS OPAG on Integrated Observing Systems and the DPFS OPAG expert team. These studies can be used as the basis for an extrapolation to the current observation problem.

4.6.3 The assessment of the problem needs to be a collective effort. However, suitable individuals to initiate and coordinate action may be the Chairs of the OPAGs on the Integrated Observing System or the Data Processing and Forecasting System or, if the problem is confined to one or two regions, the Chairs of the appropriate Regional Association working groups on implementation coordination of the World Weather Watch.

4.7 Problem prevention where possible

4.7.1 For example:

Make submissions to data providers to influence decisions

4.7.2 Expert and representative impact assessments provide an authoritative basis for such submissions. This strategy was used unsuccessfully in the case of the Omega system, but is being used to preserve the microwave frequencies allocated for meteorological and remote sensing purposes. It may also be used to make representations to NMHSs on planned closures of particularly valuable observing stations.

Dissemination of information to highlight the impact of the loss of observations

form alliances with other users affected (e.g. radioastronomy in the case of microwave frequencies)

Responsibility for such tasks is best suited to the Secretariat.

4.8 Investigate mitigation strategies

preserve as many components of the observation as possible

e.g. in the case of the Omega system WMO advocated continuation of soundings for temperature and humidity even if wind observations were not possible

assist in implementing replacement systems

e.g. in the case of soundings from NOAA-11, an informal group worked by e-mail with NOAA/NESDIS to facilitate the implementation of the new message type for soundings from NOAA-15 through message decoding and testing, feedback on errors and the sharing of information among the user community. The process was assisted by a responsive attitude and helpful advice from NESDIS.

use alternative sources of data

This may be a longer-term strategy, as in the Future Composite GOS, but there may be short-term possibilities such as the use of AMDAR ascent and descent profiles in place of radiosonde flights. CBS can assist by providing information and training on such possibilities.

establish back-up systems (e.g. satellites)

build in redundancy in the GOS

This may also be a longer-term strategy for the Future Composite GOS.

(responsibility: CBS)

4.9 Finding and allocating resources to ameliorate the problem

4.9.1 This could range from funding for particular observation types to relocation of backup satellites. The impact statements referred to above may be used in developing priorities for allocation of funding. Other criteria may be:

Effectiveness in ameliorating the problem

Reliability

Meeting of functional requirements (e.g. reaching 5 hPa for GUAN radiosondes)

Quality (e.g. as assessed through the Lead Centre monitoring)

Long-term continuity (especially for GCOS)

Support for multiple programs

Unique characteristics

(responsibility: Members)

4.10 Monitoring the problem

assessing the extent of the problem and comparing it with projections

fine tuning and adaptation of responses where possible.

4.11 Post-event Review

4.11.1 After the event, conduct a review to record any lessons learnt and to document procedures for future events.

(responsibility: CBS)

Administrative aspects

utilize existing formal and informal groups within CBS and WMO in general (CIMO, CAS, CCl, etc.)

if the problem is of a significant scale, establish a Task Team to analyze the problem, share existing information and develop expert advice.

4.12 The second of these points worked successfully in the case of the termination of the Omega system. The team made recommendations to alleviate the problem and to assign priorities for the use of the available funding for the installation of alternative (GPS) radiosondes.

Additional Comments

4.13 Under the Alerting category, the meeting considered whether the current mechanisms for monitoring data availability are adequate. The recommended enhancement of the roles of the Lead Centres (see Para. 3) is also relevant here. The team also noted that the guidelines could be used to address ongoing minor problems with the available information on the global observing system (e.g. metadata) that lead to an effective loss of observations. In particular, errors in the reported heights of synoptic reporting stations (in Vol. A of WMO Publication 9) reduce the effective use of these observations and can degrade analyses. The practice of some centres of developing "black lists" or bias corrections was noted; such information could be shared among NWP centres.

Recommendations

4.14 It was also recommended that the Lead Centre quality monitoring process be made more transparent, with the list of stations identified as having problems made more widely available to Members.

Other recommended actions to provide more timely alerts to Members to changes in the GOS were:

Members should establish a Data Users e-mail newsgroup

The Secretariat should add flashing "What's New" icons to links in the WWW section of the WMO Web page that refer to recent updates on the GOS

5. POSSIBLE OTHER ROLES AND FUTURE TASKS (agenda item 5)

5.1 The meeting discussed the work plan necessary to complete its allocated tasks. These are:

to participate in and review the results of the verification case study on Russian radiosondes

to prepare by 1 September a final set of recommendations in a report for the next session of CBS.

5.2 The meeting also identified roles and tasks which need to be maintained on an ongoing basis within the OPAG on DPFS, by the ICT, other expert teams or designated focal points. These were:

to represent the broad needs of GDPS operations (including both its scope and regional variations) to other relevant teams within CBS such as the Expert Team on Observational Data Requirements and the Redesign of the GOS

to further develop the data monitoring role of centres and to review the procedures and scope of the exchange of verification scores of NWP products. This role could be carried out by the ICT or it may be considered beneficial to designate a focal contact person.

if the potential of the verification scores for assessing the impact of changes in the GOS is demonstrated, to establish an ongoing mechanism and procedure for the analysis of the scores and the attribution of causes.

6. CLOSURE OF MEETING (agenda item 6)

6.1 The meeting was closed on Saturday, 11 March 2000.

Appendix I

AGENDA

1. OPENING OF THE MEETING

2. ORGANIZATION OF THE MEETING

2.1 Approval of the agenda

2.2 Working arrangements for the meeting

3. USE OF NWP VERIFICATION STATISTICS TO ASSESS IMPACT OF CHANGES TO THE GOS

4. GUIDELINES ON ACTIONS REQUIRED TO MINIMISE THE IMPACT OF A LOSS OF OBSERVATIONS ON THE OPERATION OF THE GDPS

5. POSSIBLE OTHER ROLES AND FUTURE TASKS

6. CLOSURE OF THE MEETING

Appendix II

LIST OF PARTICIPANTS

| AUSTRALIA | Mr Terry Hart, (Chair) Bureau of Meteorology GPO Box 1289K MELBOURNE VIC 3001 Australia Tel: (613) 9669 4030 Fax: (613) 9662 1222 Email: t.hart@bom.gov.au |

| CANADA | Mr Gilles Verner Canadian Meteorological Centre 2121 Trans-Canada Highway DORVAL QUEBEC Canada H9P 1J3 Tel: (1 514) 421 4624 Fax: (1 514) 421 4657 Email: gilles.verner@ec.gc.ca |

| FRANCE | Mr Bruno Lacroix Météo-France 42 Avenue Coriolis 31057 TOULOUSE CEDEX France Tel: (33 5) 6107 8270 Fax: (33 5) 6107 8209 Email: bruno.lacroix@meteo.fr |

| JAPAN | Mr Nobutaka Mannoji Japan Meteorological Agency 1-3-4 Otemachi Chiyoda-ku TOKYO 100 Japan Tel: (813) 3212 8341 ext. 3320 Fax: (813) 3211 8407 Email: nmannoji@npd.kishou.go.jp |

| UNITED KINGDOM | Mr Mike Bader UK Met Office London Road Bracknell BERKSHIRE RG12 2SZ United Kingdom Tel: (44 1344) 856 424 Fax: (44 1344) 854 026 Email: mjbader@meto.gov.uk |

| | Mr Richard Dumelow UK Met Office London Road Bracknell BERKSHIRE RG12 2SZ United Kingdom Tel: (44 1344) 854 489 Email: rdumelow@meto.gov.uk |

| USA | Dr Steve Tracton National Weather Service NOAA 5200 Auth Road CAMP SPRINGS MD 20446 USA Tel: (1 301) 763 8000 ext 7222 Fax: (1 301) 763 8545 Email: steve.tracton@noaa.gov |

| ECMWF | Mr Antonio Garcia-Mendez ECMWF Shinfield Park READING BERKSHIRE RG2 9AX United Kingdom Tel: (44 118) 949 9424 Fax: (44 118) 986 9450 Email: a.garcia@ecmwf.int |

| WMO | Mr Morrison Mlaki World Meteorological Organization 7 bis, avenue de la Paix Case postale No. 2300 CH-1211 GENEVA 2 Switzerland Tel: (41 22) 730 8231 Fax: (41 22) 730 8021 E-mail: mmlaki@www.wmo.ch |

| | Mr Dieter Schiessl (initial part of meeting) |